January 29, 2026

- A Senior QA’s Confession from the Trenches

I’ve been in QA long enough to remember when “automation” meant a folder full of brittle Selenium scripts and late-night Slack messages about broken test cases. Every release felt like a balancing act between quality and deadlines. We’d patch, rerun, revalidate, and still miss something critical, not because we weren’t good at our jobs, but because the system itself was flawed.

There was a week last year that made me seriously rethink my entire testing philosophy. We had just finished a feature sprint for a complex payment workflow. Everything looked clean on paper - new APIs, updated UIs, and regression coverage rechecked. But three days before release, we found a defect that had silently propagated through two microservices and corrupted transaction states. Fixing it took 10 engineers, two sleepless nights, and a delayed deployment. The frustrating part? The bug was traceable. It was the kind of thing we should have caught early but didn’t, because our test suite wasn’t evolving fast enough.

That was my breaking point. I realized that traditional automation wasn’t enough anymore. It wasn’t even automation in the true sense; it was scripted repetition. We weren’t scaling intelligence; we were scaling maintenance overhead.

The Shift: When AI Started Writing the Tests We Forgot To

When I first heard about Testspell, I was skeptical. “AI-driven test generation” sounded like marketing fluff. But curiosity got the better of me, so we integrated it into a sandbox branch: no production risk, no expectations. Within hours, my perception changed.

Testspell didn’t just execute tests; it understood the intent behind our code and requirements. When we committed new API endpoints, it automatically generated test cases, validating payload structures, checking edge-case responses, even flagging mismatched headers. I remember a specific example from our authentication module: we added a two-step verification endpoint, and Testspell instantly created validation tests for response latency, payload integrity, and timeout scenarios. These were cases my team hadn’t even documented yet.
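To give a feel for what checks like these look like, here is a minimal Python sketch. It is not Testspell output: `verify_second_step` is a hypothetical in-process stand-in for a two-step verification endpoint, and the six-digit code rule and the 0.5-second latency budget are assumptions made only for illustration.

```python
import time
from typing import Any, Dict


def verify_second_step(payload: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical stand-in for the two-step verification endpoint."""
    code = payload.get("code")
    # Assumed rule: the code must be a string of exactly six digits.
    if not isinstance(code, str) or len(code) != 6 or not code.isdigit():
        return {"status": 400, "body": {"error": "invalid_code"}}
    return {"status": 200, "body": {"verified": True}}


def check_payload_integrity() -> None:
    """A well-formed payload succeeds; malformed payloads are rejected."""
    ok = verify_second_step({"code": "123456"})
    assert ok["status"] == 200 and ok["body"] == {"verified": True}
    for bad in ({}, {"code": 123456}, {"code": "12345"}, {"code": "abcdef"}):
        assert verify_second_step(bad)["status"] == 400


def check_latency(budget_seconds: float = 0.5) -> None:
    """The call must return within the (assumed) latency budget."""
    start = time.perf_counter()
    verify_second_step({"code": "123456"})
    assert time.perf_counter() - start < budget_seconds


check_payload_integrity()
check_latency()
```

Against a real service, the stand-in would be replaced by an HTTP call with a client-side timeout, which is where the timeout scenarios would come in.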

That was the “aha” moment. It wasn’t about replacing testers; it was about augmenting us. The tool filled the coverage gaps we didn’t know existed.

The Reality Check: Manual vs. Machine

Here’s the harsh truth: our QA cycle before Testspell averaged 12 days. Requirement-to-test time alone took nearly five days, with countless hours lost to script debugging and test data updates. The result? Our regression cycle was always running behind schedule, forcing us into the dreaded “weekend release crunch.”

After integrating Testspell into our CI/CD pipeline (in our case, Jenkins and GitHub Actions), the difference was immediate. The same process now takes six days from end to end. Requirement-to-test time dropped to one day. Regression coverage nearly doubled without expanding the QA headcount.
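For anyone who wants to run the same comparison on their own numbers, the arithmetic is simple enough to sketch in Python. The function name is made up for illustration, and the inputs are the rounded figures quoted above (the five-day figure was "nearly five", so treat the result as approximate).

```python
def percent_reduction(before: float, after: float) -> float:
    """Reduction from `before` to `after`, as a percentage of `before`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return 100 * (before - after) / before


# Figures from the text, in days.
qa_cycle = percent_reduction(12, 6)
req_to_test = percent_reduction(5, 1)

print(f"QA cycle: {qa_cycle:.0f}% shorter")
print(f"Requirement-to-test: {req_to_test:.0f}% shorter")
```

On those inputs the QA cycle comes out 50% shorter and requirement-to-test time 80% shorter.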

One of our developers joked, “It’s like having a junior tester who never sleeps, and actually writes clean code.”

Testspell automatically runs on every pull request, generating relevant test cases and validating endpoints before merge. It reports coverage gaps in real time, so QA and dev don’t need to chase each other on Slack anymore. That tight feedback loop saved us from multiple release-day crises.

Defect Leakage: The Silent Killer

Before AI testing, defect leakage was our Achilles' heel. No matter how rigorous our manual checks were, around 8% of defects still slipped into production. Some were minor; others triggered support tickets and customer escalations that hurt credibility.

After six months with Testspell, our defect leakage dropped below 2%. The difference came from the AI’s ability to predict failure points based on historical test data and new code patterns. In one instance, it flagged a data-type mismatch in a healthcare project that would have caused downstream billing errors. That detection alone justified the entire rollout.
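Teams define defect leakage in slightly different ways. The sketch below assumes a common one: defects found in production divided by all defects found (before release plus in production). The defect counts are hypothetical, chosen only to land on leakage rates like the ones described here.

```python
def defect_leakage(found_in_production: int, found_before_release: int) -> float:
    """Share of all known defects that escaped to production, in percent."""
    total = found_in_production + found_before_release
    if total == 0:
        return 0.0  # no defects recorded, so nothing leaked
    return 100 * found_in_production / total


# Hypothetical counts for two release periods.
before_ai = defect_leakage(found_in_production=8, found_before_release=92)
after_ai = defect_leakage(found_in_production=3, found_before_release=197)

print(f"before: {before_ai:.1f}%")  # before: 8.0%
print(f"after: {after_ai:.1f}%")    # after: 1.5%
```

Whichever definition you use, keep it fixed across periods; otherwise the before and after numbers are not comparable.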

Adapting at the Speed of Code

Change used to be QA’s worst enemy. Every API rename or schema update meant broken scripts and hours of rework. With Testspell, adaptability is automatic. Its AI engine continuously scans both requirements and code deltas, regenerating test cases when something changes.

A concrete example: when our backend team refactored the product catalog service, it would’ve normally taken us a sprint just to fix the test suite. Testspell regenerated the affected test cases within minutes, validated new payloads, and executed them on the next build cycle. Not a single test failure was carried forward.

For me, that was revolutionary, not because it made QA faster, but because it made QA resilient.

The Business Lens: ROI Beyond Metrics

From a leadership standpoint, automation is only valuable if it moves the business needle. Testspell did exactly that.

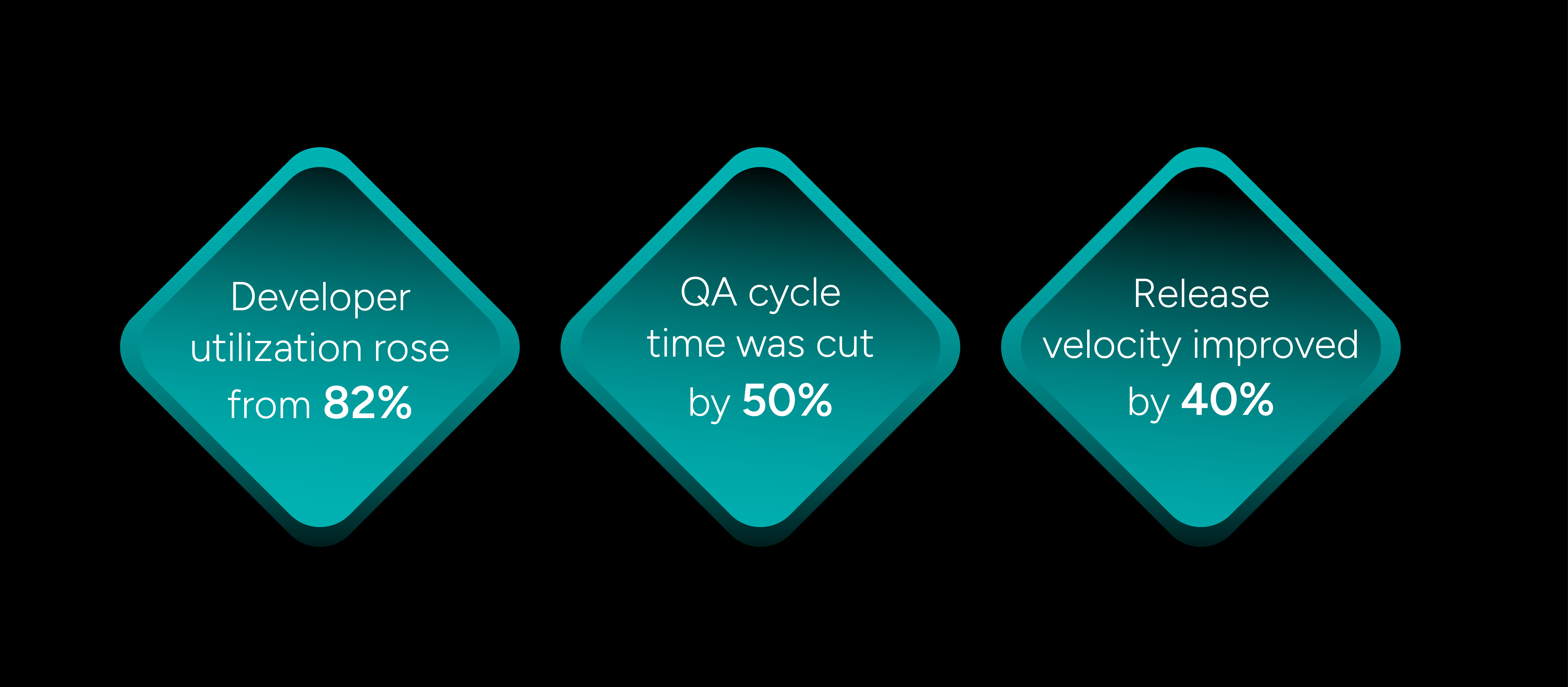

- Developer utilization rose from 65% to 82%.

- QA cycle time was cut in half.

- Release velocity improved by 40%, directly shortening time-to-market for key product updates.

And unlike our previous tools, Testspell didn’t require specialized scripting knowledge or dedicated QA automation engineers. It integrated cleanly into our DevOps workflow, operated securely within our environment, and produced measurable, repeatable gains.

For a mid-size enterprise like ours, that translates to faster delivery, lower operational overhead, and a tangible morale boost for both QA and development teams.

The Cultural Shift: From Test Execution to Test Intelligence

I used to think automation would make QA boring. That AI would take away the part where we “hunt for bugs.” Turns out, it did the opposite.

By offloading the repetitive, mechanical side of testing, our team actually had time to focus on exploratory testing, user experience, and compliance validation. Instead of firefighting broken scripts, we started designing better test strategies.

In the first quarter post-Testspell, we identified three major UX regressions, not because the AI missed them, but because we finally had the bandwidth to think beyond the checklist. The irony? AI didn’t replace QA intuition; it made it valuable again.

Why AI Testing Is No Longer Optional

The future of QA isn’t manual vs. automated. It’s intelligence vs. inertia. As systems grow more complex (microservices, distributed APIs, cloud-native stacks), the margin for manual inefficiency vanishes.

AI-driven platforms like Testspell aren’t a luxury anymore; they’re survival tools for modern engineering. They bring precision, speed, and adaptability to testing at a scale humans simply can’t match.

If I could tell my younger QA self one thing, it would be this:

“Automation scripts were a bridge. AI testing is the destination.”

With Testspell, we no longer chase coverage. We define it, evolve it, and ship with confidence that our codebase can keep up with change.

Final Reflection

Quality Assurance has always been about trust: trust in your product, your process, and your people. For the first time in my career, trust feels backed by intelligence, not just effort.

I’m no longer afraid of release nights. I’m not worried about API changes or last-minute code pushes. Because now, our QA isn’t reactive. It’s predictive.

And that’s the future every engineering leader should be building toward, one where AI doesn’t just assist testing; it defines its standard.
