February 2, 2026

TL;DR

Review fatigue is a growing problem in enterprise engineering teams. As codebases grow and delivery timelines shrink, reviewers are stretched thin, spending too much time on repetitive issues and not enough on strategic code quality. CodeSpell addresses this by embedding best practices directly into the developer workflow. It auto-generates clean, documented, and testable code, significantly reducing the manual effort needed during reviews.

The Growing Burden of Code Reviews

For large engineering teams, code reviews are both a safeguard and a bottleneck. They catch bugs, enforce standards, and mentor junior developers. But at scale, they become a drain.

Reviewers are expected to evaluate dozens of pull requests each week, often under pressure. Many of these PRs contain unclear logic, missing tests, inconsistent formatting, or simply too much change in one go. The mental load is high. Over time, this leads to review fatigue, a state where focus drops, feedback gets superficial, and critical issues are missed. Review fatigue slows down the entire development cycle, causes frustration among developers, and increases the likelihood of bugs making it to production.

Review Fatigue Isn’t Just About Volume, It’s About Repetition

Enterprise teams often deal with:

- Redundant code patterns

- Lack of standardized documentation

- Missing or low-quality unit tests

- Inconsistent use of internal frameworks or APIs

- Long PRs with limited context

The issue isn’t just the number of reviews but their repetitive nature. When reviewers repeatedly flag the same types of issues, such as indentation, naming conventions, and missing docstrings, it’s clear the system isn’t working efficiently.

CodeSpell: A Proactive Approach to Code Quality

CodeSpell is positioned as more than an AI coding assistant: it’s an SDLC co-pilot. Instead of reacting to bad PRs, it helps prevent them by assisting developers inside their IDEs, long before code reaches a reviewer.

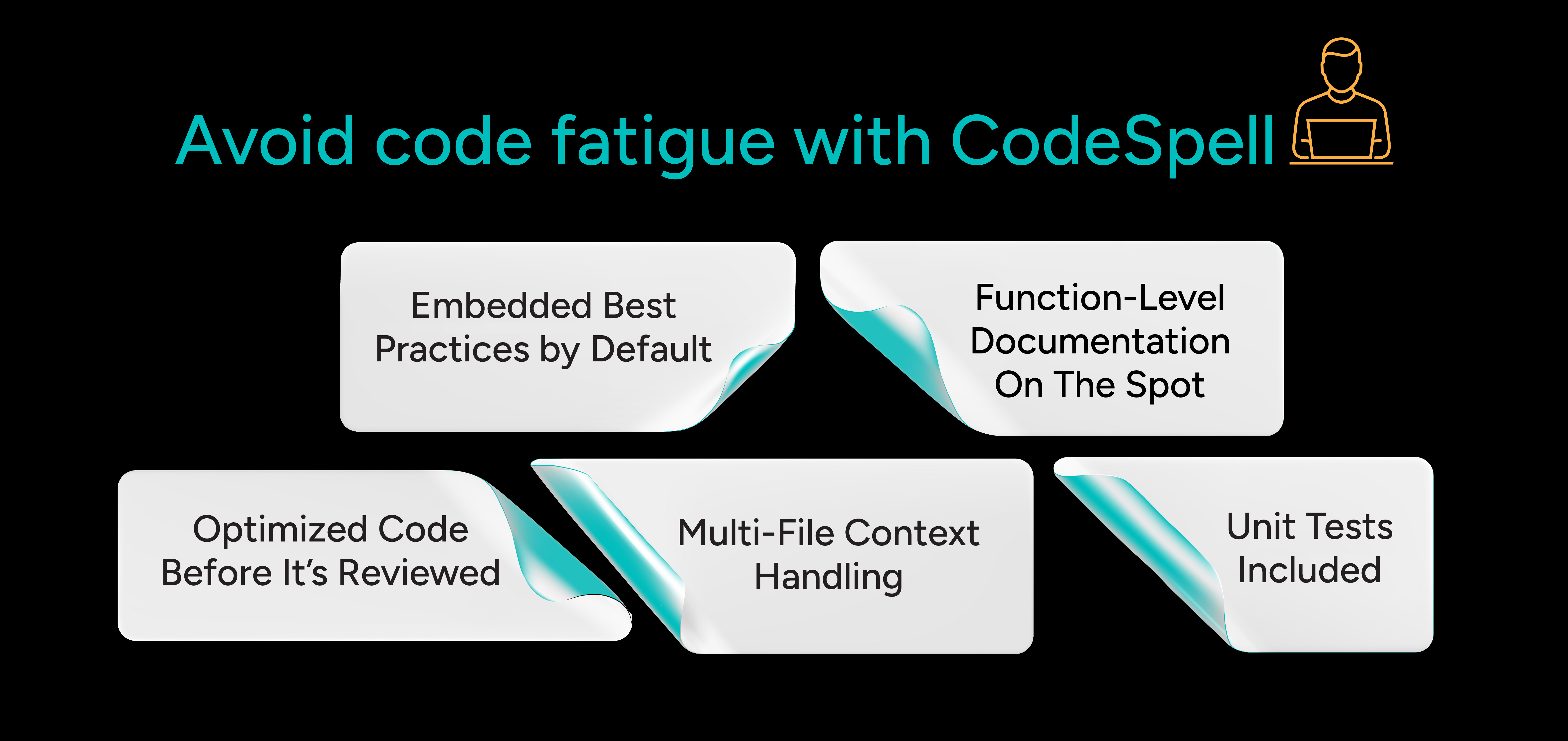

Here’s how it makes a tangible impact on review fatigue:

1. Embedded Best Practices by Default

CodeSpell doesn’t just generate code; it generates code from predefined templates and organization-level best practices. These cover structure, naming conventions, error handling patterns, and more. Developers start with a high-quality base, reducing the need for reviewers to flag foundational issues.

2. Function-Level Documentation On The Spot

With a simple /doc spell or inline icon, developers can automatically generate detailed docstrings for their functions. This means reviewers don’t have to waste cycles asking, “What does this method do?” or “Can you explain the logic here?”

Documentation becomes a default part of the dev workflow rather than a post-PR scramble.
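To make this concrete, here is a minimal sketch of the kind of function-level docstring that answers those reviewer questions up front. The function `apply_discount` and its parameters are hypothetical, and the docstring was written by hand in a common Python style for illustration; it is not actual CodeSpell output.

```python
def apply_discount(price: float, percent: float) -> float:
    """Return the price after applying a percentage discount.

    Hypothetical example function, used only to illustrate a docstring.

    Args:
        price: Original price; must be non-negative.
        percent: Discount percentage, between 0 and 100 inclusive.

    Returns:
        The discounted price, rounded to two decimal places.

    Raises:
        ValueError: If price is negative or percent is outside 0..100.
    """
    if price < 0:
        raise ValueError("price must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)
```

With the contract, the valid ranges, and the failure modes stated in the docstring, a reviewer can check the body against the stated intent instead of reverse-engineering it.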

3. Unit Tests Included

CodeSpell can auto-generate unit tests based on the function context. It selects the appropriate framework (e.g., Jest or JUnit), writes the test scaffolding, and ensures that every logic branch is accounted for. Reviewers no longer have to send the classic “Please add tests” comment.
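As a rough illustration of what "every logic branch accounted for" looks like in practice, the sketch below pairs a small function with one test per branch. The function `shipping_fee`, its thresholds, and the test names are all hypothetical, and the tests are hand-written in plain pytest-style functions; this is not output from CodeSpell.

```python
def shipping_fee(order_total: float, is_member: bool) -> float:
    """Hypothetical function with three branches to exercise."""
    if order_total < 0:
        raise ValueError("order_total must be non-negative")
    if is_member or order_total >= 50:
        return 0.0  # members and large orders ship free
    return 4.99  # flat fee for everyone else


# One test per branch; pytest would discover these by their test_ prefix.
def test_negative_total_is_rejected():
    try:
        shipping_fee(-1.0, is_member=False)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a negative total")


def test_member_ships_free():
    assert shipping_fee(10.0, is_member=True) == 0.0


def test_large_order_ships_free():
    assert shipping_fee(50.0, is_member=False) == 0.0


def test_small_non_member_order_pays_fee():
    assert shipping_fee(49.99, is_member=False) == 4.99
```

A PR that arrives with tests of this shape lets the reviewer judge whether the branches are the right ones, rather than asking for tests to exist at all.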

4. Optimized Code Before It’s Reviewed

Developers can use the /optimize spell or inline options to refactor long, messy functions into cleaner, modular code. This directly reduces reviewer effort and ensures that feedback focuses on business logic, not structural hygiene.

It’s especially useful for junior developers, enabling them to submit production-grade code confidently.
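The sketch below shows, under stated assumptions, the kind of structural cleanup this step describes: one function that validates, computes, and formats is split into small single-purpose helpers with identical behavior. All names, the discount threshold, and the discount rate are hypothetical, and the refactor was done by hand for illustration rather than produced by the /optimize spell.

```python
# Before: validation, arithmetic, and formatting tangled in one function.
def order_summary_before(items):
    total = 0.0
    for item in items:
        if item["qty"] <= 0:
            raise ValueError("qty must be positive")
        total += item["price"] * item["qty"]
    if total > 100:
        total = total * 0.9
    return f"Total: {total:.2f}"


# After: each helper does one thing and can be reviewed and tested alone.
def validate_items(items):
    for item in items:
        if item["qty"] <= 0:
            raise ValueError("qty must be positive")


def subtotal(items):
    return sum(item["price"] * item["qty"] for item in items)


def apply_bulk_discount(total, threshold=100.0, rate=0.10):
    # Hypothetical rule: 10% off orders above the threshold.
    return total * (1 - rate) if total > threshold else total


def order_summary_after(items):
    validate_items(items)
    return f"Total: {apply_bulk_discount(subtotal(items)):.2f}"
```

Because the two versions behave the same, the reviewer's attention can go to whether the discount rule itself is right, which is the business-logic question that matters.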

5. Multi-File Context Handling

One of the biggest challenges for reviewers is lack of context. CodeSpell allows developers to bring in multiple files (controllers, services, models) while asking the assistant for suggestions or documentation. This ensures that the resulting code, along with any generated documentation or tests, is context-aware and coherent.

The reviewer doesn’t have to jump between files to piece things together.

The Result: More Focused Reviews

When CodeSpell is embedded in the team’s IDEs:

- Reviewers see cleaner, more consistent code

- Less time is spent on syntax, spacing, or missing docstrings

- Developers submit PRs with tests and explanations

- Review cycles shorten, often significantly

- Reviewers can focus on architecture, design decisions, and business logic

For CTOs, this translates to faster delivery, happier teams, and lower risk.
